Sprint 2 - Install & Configure

Installation

The focus for Sprint 2 was to get a basic “hello world” example running on my Oculus Quest. This type of program is normally trivial, but it proves out the configuration of the hardware and software environment. That lays the foundations for more interesting projects further down the line and builds confidence with the tooling.

The first challenge was to settle on a version of Unity. The aforementioned Coursera XR intro course suggested pinning an older version, but that was too restrictive for following other online tutorials. I settled on 2018.4, installed via the Unity Hub. That also meant I no longer needed the separate Android Studio app and accompanying SDK, as it’s now included in Unity as a module via the Unity Hub. I did manage to build and deploy the “VR campus” app from the XR course, but I quickly realised I needed to start with a more basic example that required fewer hacks and libraries.

The installation was much more of a dance than it sounds, because my Internet connection at home is “challenged”. I had to find a café with a good connection and, ideally, install only the essentials. You really notice how much development tooling assumes a solid connection when you don’t have one!

I generally found the official Unity and Oculus guides not only sufficient but usually better than third-party tutorials. The caveat with the Unity guides is the sheer number of versions of their software and documentation. You need to be really careful about which version of the docs you’re reading when you arrive from a Google search. I guess this really highlights the pace at which the industry is moving right now!

Hello World

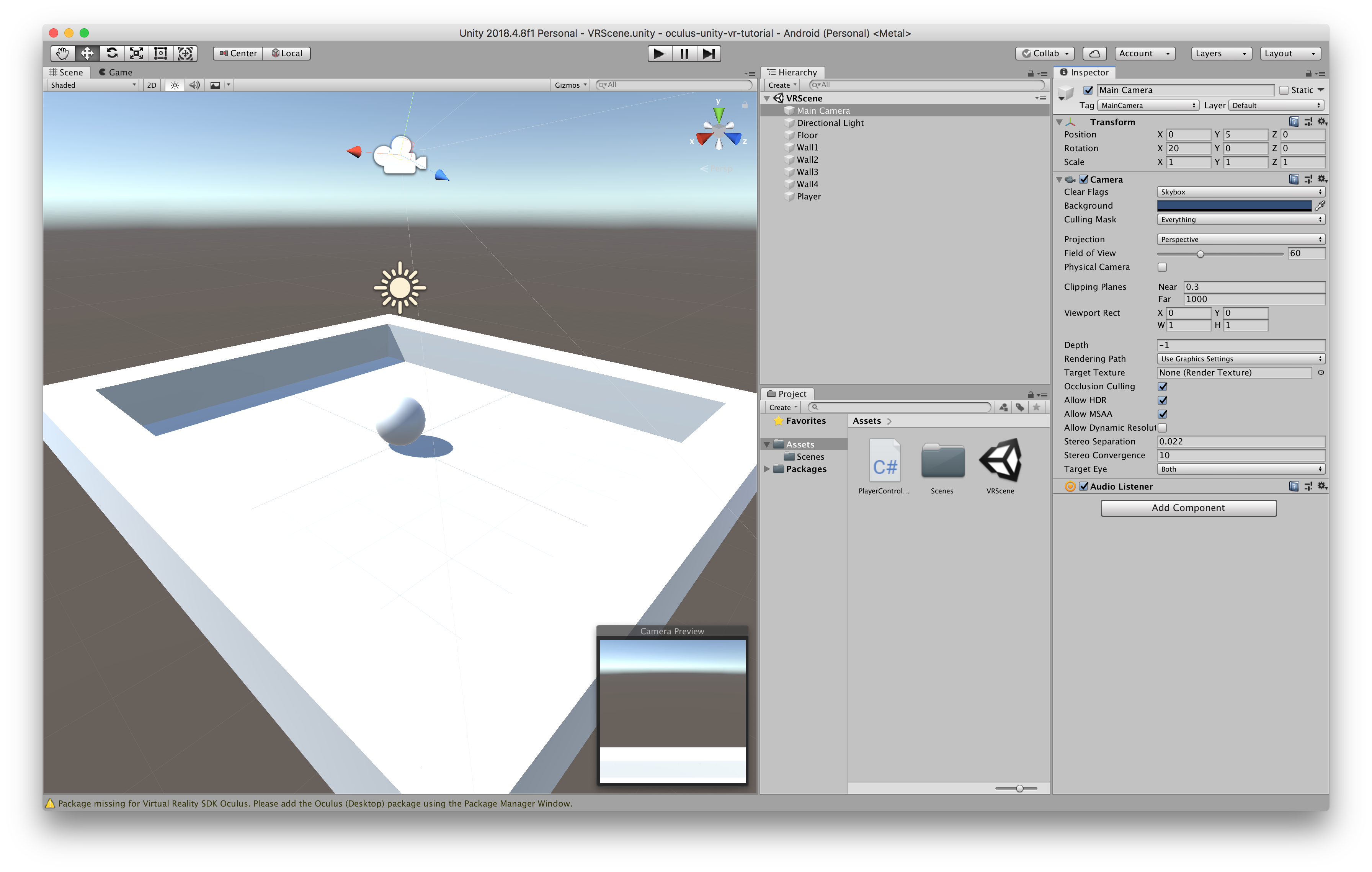

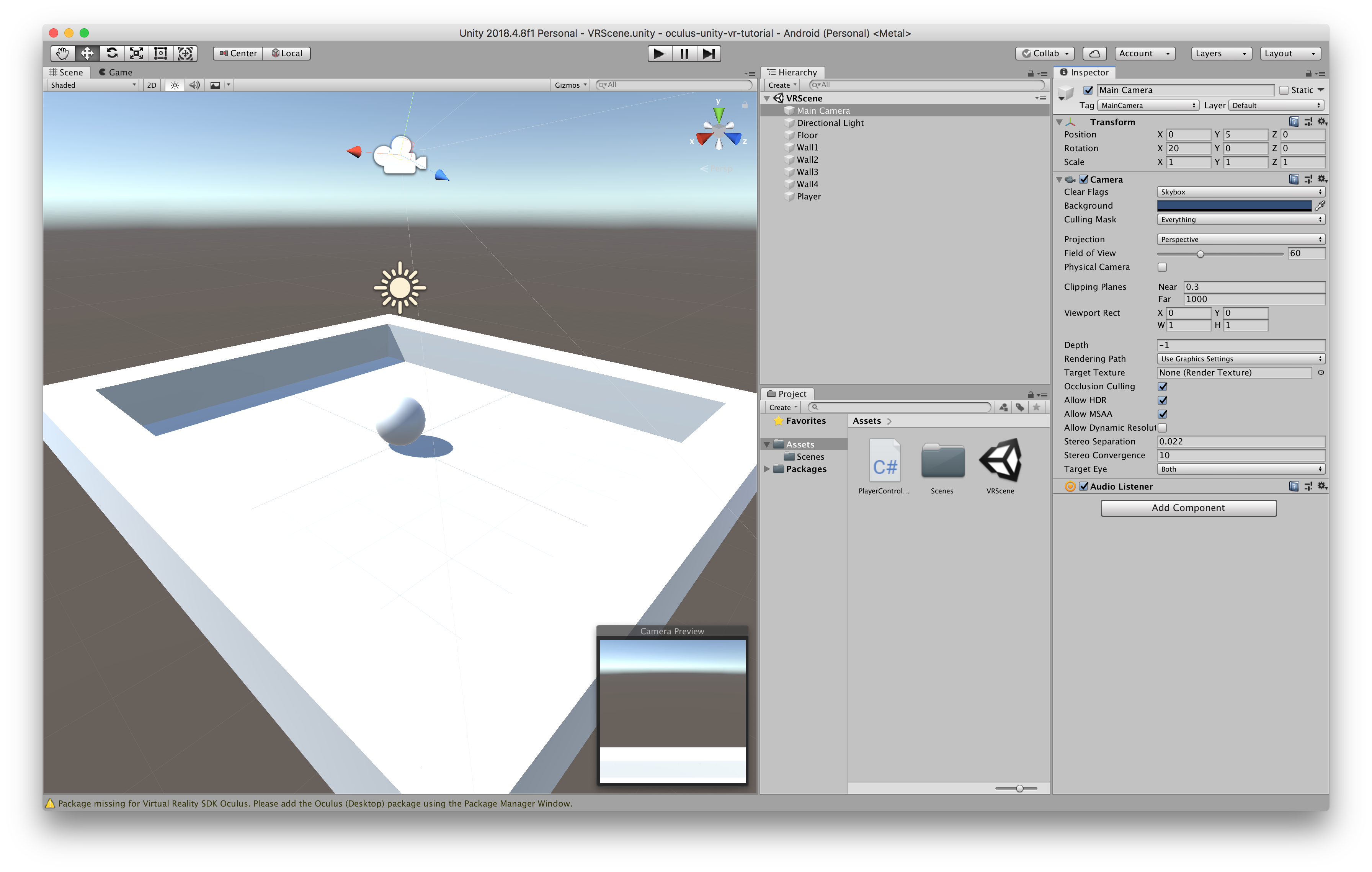

Once everything was configured, I followed the Oculus “Build Your First VR App” tutorial from the Getting Started docs. It also guides you through creating basic GameObjects in Unity, which is useful if, like me, you don’t have much game dev experience before starting to learn XR. It didn’t take long to develop the app, and I got it deployed to my headset by following the latter part of a Quest dev tutorial - success!

That’s a great start, but I soon began to wonder about the development feedback cycle. I’m used to trialling ideas with quick feedback from tooling (REPLs, live coding etc.), tests and the running application. Do I really have to build an Android Package (APK) and deploy it to the device every time I want to test something? The short answer, I’m afraid, is yes. As you might expect, the situation is different for the Rift, since it’s a tethered device. More about that in a later post, probably.

Inspiration

Alongside the techy stuff, I also reached out for advice to my network and beyond, to anyone working in XR-related fields. This started with my third mentor meeting, hosted by Irini Papadimitriou. She was formerly digital programmes manager at the V&A and is now creative director of the brilliant Future Everything festival in Manchester, an organisation I’ve collaborated with in the past. Irini’s mentor role on this project is as a curator and producer. Her enthusiasm was infectious, and she gave me an extensive brain dump of XR projects she’d experienced.

Irini suggested too many great projects to mention, but a couple stood out. The first was Hack The Senses and their 2017 V&A collaboration. The first two of its installations overlapped a lot with my own interests, particularly the sensory perception topics I touched on in the previous post.

The second was the celebrated Notes On Blindness recordings, film and accompanying VR experience. The film is based on the audio diaries John Hull kept while losing his sight. The diaries are an articulate, compelling reflection on his situation and on the changes to the world as he perceives it. Following a short film and a subsequent BAFTA-winning feature film, the VR experience is a triumph: a spellbinding narrative delivered using the strengths of immersive technology. The scenes are visually opaque and abstract, yet magical. Careful 3D sound design really accentuates the diary narrative and helps to place the listener inside John’s story and experience (well, as much as anything can hope to). Smart, subtle gestures highlight or reveal parts of the scene.

I particularly liked the part where John theorises that the sound of rain hitting an object would help to emphasise it and position it in space, and laments the lack of indoor rain for the same purpose. The VR recreation of this scene uses gaze-based gestures to reveal each new object in the room gradually. The reveal is visual and, more importantly, sonic. This additive process subtly builds a wonderful, rhythmic musique concrète soundtrack, and the 3D sound design particularly shines here. I really can’t recommend this story, film and immersive experience enough!

3D Sound

Talking of 3D sound, I reached out to Tom Slater at Call and Response, another organisation I’ve worked with a few times in the past. They curated an 8-channel surround sound sonic art programme for our Netaudio festival way back. I also mixed an ambisonic release for Zimoun’s Leerraum project at their Deptford 18.1 studio and completed a great ambisonic workshop they ran last year. Alas, logistics have so far prevented us from meeting in person. I was pleased to read that they have been awarded funding for exciting projects from Creative XR and the Jerwood Foundation, and I hope to follow up later.

Industry XR

Lastly, I got some good insight into the commercial XR and game development industry from my old friend Craig Gabell, who co-founded Brighton-based West Pier Studios. Of particular interest was the mix of VR and AR technology in My Virtual Space, an application they developed as a tool for exploring and customising environments.

published on 12 Sep 2019

This project is gratefully supported by Arts Council England as part of their Developing Your Creative Practice (DYCP) fund.